Bibliographic Data and Rankings

This chapter is based on the notes `2022-05-24_SPSE_Rankings.pdf`.

Bibliographic Data

Example of a publication record in DBLP's XML format:

```xml
<dblp>
<inproceedings key="conf/tacas/Beyer22" mdate="2022-04-29">
<author orcid="0000-0003-4832-7662">Dirk Beyer 0001</author>
<title>Progress on Software Verification: SV-COMP 2022.</title>
<pages>375-402</pages>
<year>2022</year>
<booktitle>TACAS (2)</booktitle>
<ee type="oa">https://doi.org/10.1007/978-3-030-99527-0_20</ee>
<crossref>conf/tacas/2022-2</crossref>
<url>db/conf/tacas/tacas2022-2.html#Beyer22</url>
</inproceedings>
</dblp>
```

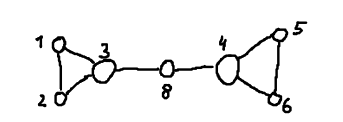
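A record like the one above can be read with Python's standard `xml.etree.ElementTree` module. The sketch below is a minimal illustration, not DBLP's own tooling: the function name `parse_publications` and the selection of output fields are hypothetical, and it skips any child element without a `key` attribute (such as a stray `<script/>` element).

```python
import xml.etree.ElementTree as ET

# The DBLP record shown above, embedded so the sketch runs on its own.
DBLP_XML = """<dblp>
<inproceedings key="conf/tacas/Beyer22" mdate="2022-04-29">
<author orcid="0000-0003-4832-7662">Dirk Beyer 0001</author>
<title>Progress on Software Verification: SV-COMP 2022.</title>
<pages>375-402</pages>
<year>2022</year>
<booktitle>TACAS (2)</booktitle>
<ee type="oa">https://doi.org/10.1007/978-3-030-99527-0_20</ee>
<crossref>conf/tacas/2022-2</crossref>
<url>db/conf/tacas/tacas2022-2.html#Beyer22</url>
</inproceedings>
</dblp>"""


def parse_publications(xml_text):
    """Return one dict per publication element directly below <dblp> (hypothetical helper)."""
    root = ET.fromstring(xml_text)
    publications = []
    for pub in root:
        # Publication elements (inproceedings, article, ...) carry a DBLP key;
        # anything else, e.g. a stray <script/>, is skipped.
        if pub.get("key") is None:
            continue
        year = pub.findtext("year")
        publications.append({
            "type": pub.tag,
            "key": pub.get("key"),
            # (name, ORCID or None); "0001" is DBLP's number for telling apart people with the same name
            "authors": [(a.text, a.get("orcid")) for a in pub.findall("author")],
            "title": pub.findtext("title"),
            "venue": pub.findtext("booktitle"),
            "year": int(year) if year else None,
            "ee": pub.findtext("ee"),
            "crossref": pub.findtext("crossref"),
        })
    return publications


for pub in parse_publications(DBLP_XML):
    print(pub["key"], pub["year"], pub["crossref"])
    # conf/tacas/Beyer22 2022 conf/tacas/2022-2
```

The `crossref` field holds the DBLP key of the proceedings volume, so a full tool would look that key up to get the volume's editors, publisher, and year.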

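Crossrefs (links from the record to other entities):

ORCID (author identifier): 0000-0003-4832-7662

Conference (DBLP key of the proceedings volume): conf/tacas/2022-2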

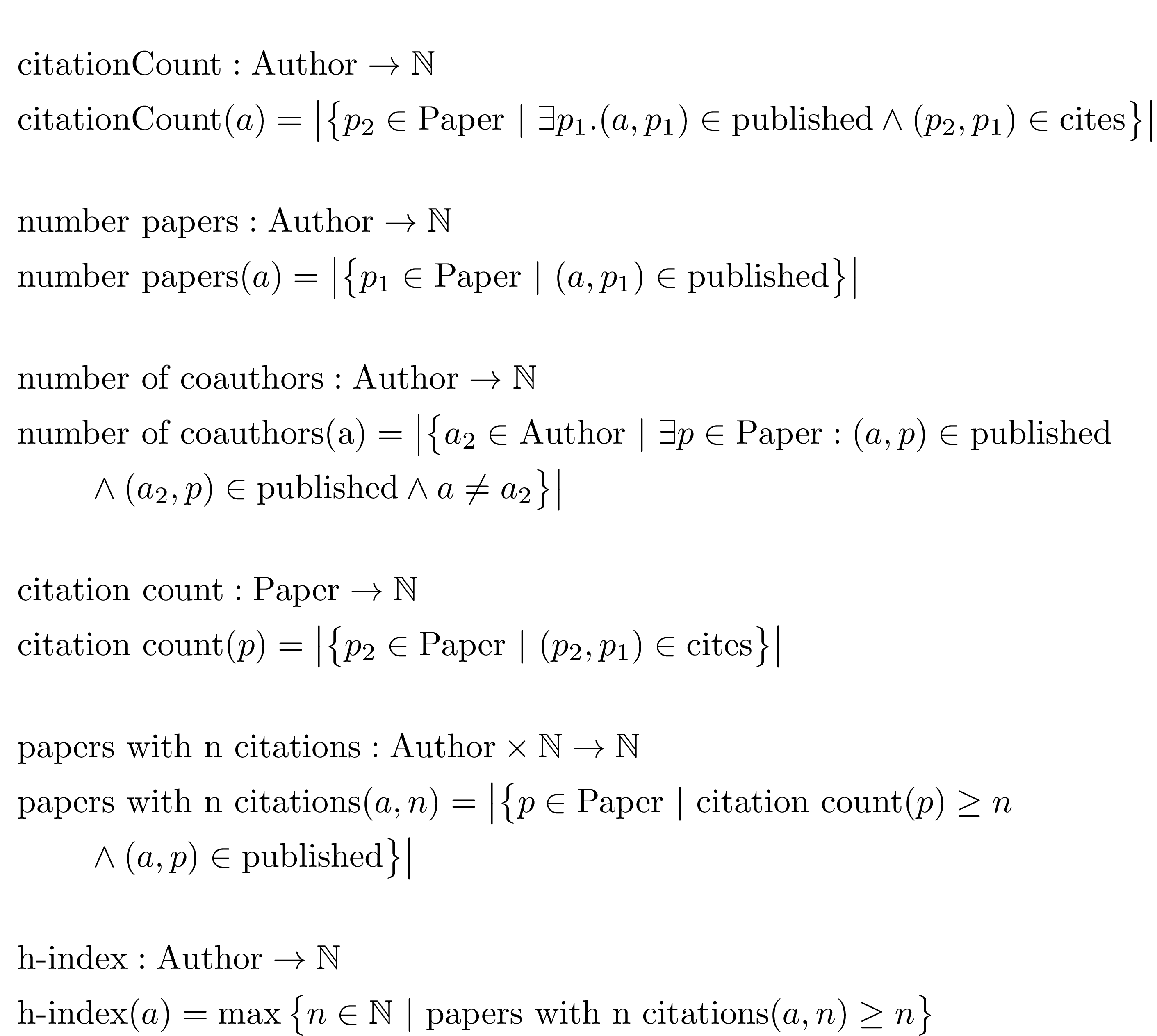
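Both crossrefs are identifiers that resolve to further records. As a minimal sketch with hypothetical helper names: the last character of an ORCID iD is a check character computed with ISO 7064 MOD 11-2, and an ORCID iD resolves under `https://orcid.org/`. The DBLP URL pattern `https://dblp.org/rec/<key>.html` used below is an assumption about DBLP's current site layout.

```python
def orcid_checksum_ok(orcid):
    """Verify the check character of an ORCID iD (ISO 7064 MOD 11-2)."""
    digits = orcid.replace("-", "")
    if len(digits) != 16:
        return False
    total = 0
    for ch in digits[:15]:
        if ch not in "0123456789":
            return False
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    expected = "X" if result == 10 else str(result)  # 10 is written as "X"
    return digits[15].upper() == expected


def orcid_url(orcid):
    return "https://orcid.org/" + orcid


def dblp_record_url(key):
    # Assumption: DBLP serves records at https://dblp.org/rec/<key>.html
    return "https://dblp.org/rec/" + key + ".html"


print(orcid_checksum_ok("0000-0003-4832-7662"))  # True
print(orcid_url("0000-0003-4832-7662"))          # https://orcid.org/0000-0003-4832-7662
print(dblp_record_url("conf/tacas/2022-2"))      # https://dblp.org/rec/conf/tacas/2022-2.html
```

The check character catches single-digit typos and most swapped digit pairs, which is one reason a persistent ID is more reliable than matching author names.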

Measurements and Rankings

Be careful about the interpretation of measurements!

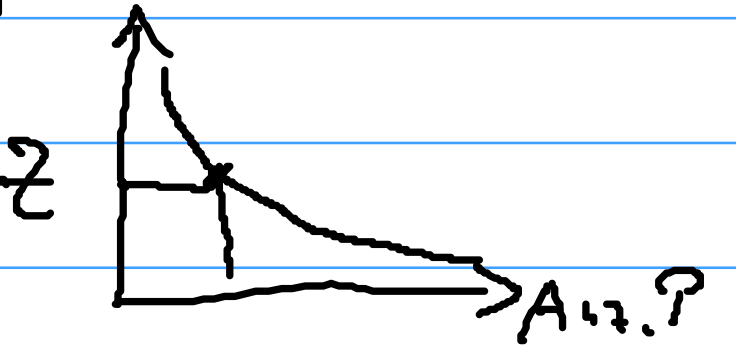

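Operational computation of the h-index:

Sort all papers of an author in decreasing order of citation count.

Walk down the sorted list with a counter starting at 1 and keep counting as long as the paper's citation count is >= the counter; h is the last counter value for which this holds.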
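Written as a formula (a standard restatement of the same procedure), where $c_k$ is the citation count of the $k$-th paper after sorting and $n$ is the number of papers:

```latex
h = \max\bigl(\{0\} \cup \{\, k \in \{1, \dots, n\} : c_k \ge k \,\}\bigr),
\qquad c_1 \ge c_2 \ge \dots \ge c_n
```

Because the counts decrease while $k$ increases, the condition $c_k \ge k$ holds for a prefix $k = 1, \dots, h$ and fails for every later $k$, so taking the maximum is the same as counting down the list until the condition first fails.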

Example:

Publication A: 5 citations

Publication B: 10 citations

Publication C: 3 citations

Publication D: 1 citation

Publication E: 7 citations

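Sorted:

| Counter | Publication | Citations | Citations >= counter? |
|---|---|---|---|
| 1 | B | 10 | yes |
| 2 | E | 7 | yes |
| 3 | A | 5 | yes |
| 4 | C | 3 | no |
| 5 | D | 1 | no |

Counting stops at 3 because C, the 4th paper, has only 3 < 4 citations => h = 3.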
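The counting procedure translates directly into a short Python function. This is a minimal sketch; `h_index` is a hypothetical helper name, and the usage applies it to publications A to E from the example.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # decreasing citation count
    h = 0
    for counter, count in enumerate(ranked, start=1):
        if count >= counter:
            h = counter
        else:
            break  # counts only decrease while the counter grows, so stop here
    return h


# Citation counts of publications A to E from the example above.
example = {"A": 5, "B": 10, "C": 3, "D": 1, "E": 7}
print(h_index(example.values()))  # 3
```

The early `break` mirrors "count downwards as long as": once one paper falls below its counter, no later paper can satisfy the condition.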

Informal rule of thumb for a person's h-index (W2 and W3 are German professorship salary grades):

h > 10: W2 professor

h > 20: W3 professor

h > 30: excellent reputation

h > 40: outstanding

These measures are heavily biased by:

age of scientist/journal/institution

area of research (some cite more broadly, others more narrowly)

collaboration culture

size and volume of institutions/conferences/journals

increasing number of publications per year (reference)

Other measures:

h5-index for publication venues (the h-index computed over the venue's papers from the last 5 complete years)

i10-index for people (number of papers with at least 10 citations)
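Both measures reuse or simplify the h-index computation. The sketch below is simplified and hypothetical: it assumes each paper's citation count is already known, represents a venue's papers as `(year, citations)` pairs, and reads "last 5 complete years" as the five calendar years before `current_year`, which matches how Google Scholar describes h5. The venue data in the usage is made up for illustration.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    # For a descending list, count >= counter holds for a prefix only,
    # so counting the matches gives h.
    return sum(1 for counter, count in enumerate(ranked, start=1) if count >= counter)


def i10_index(citations):
    """Number of papers with at least 10 citations."""
    return sum(1 for count in citations if count >= 10)


def h5_index(papers, current_year):
    """h-index over papers from the 5 complete years before current_year.

    papers: iterable of (year, citations) pairs (hypothetical input format).
    """
    window = range(current_year - 5, current_year)  # 2017..2021 for 2022
    return h_index(count for year, count in papers if year in window)


# i10 for publications A to E of the h-index example: only B has >= 10 citations.
print(i10_index([5, 10, 3, 1, 7]))  # 1

# Hypothetical venue: the 2016 and 2022 papers fall outside the window.
venue = [(2016, 50), (2018, 12), (2019, 8), (2020, 3), (2021, 2), (2022, 40)]
print(h5_index(venue, 2022))  # 3
```

Restricting h5 to a fixed window is what keeps old venues from dominating purely through age, one of the biases listed above.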

Why Rankings?

Milestones / career steps

Quantify objects

Select universities (by rank, research fields, …)

Funding decisions

Hiring decisions (select University/Department/Professor for your PhD)

Legitimization of decisions

Select publication venues

Important Rankings and Platforms

Google Scholar: Scientists (h, i10, #citations, #papers) and Venues (h5)

CORE Ranking (Computing Research and Education Association of Australasia): conference rankings by tier (A*, A, B, C, …)